Special Analysis: HIPAA Compliance Risks of AI in the Lab: Trends and Tips

The most common AI compliance risk areas related to data security and patient privacy, and the current regulatory landscape

Do you know how artificial intelligence (AI) is being used in your laboratory? Though most lab leaders likely know if and how AI is being used in diagnostic processes, they may not be aware of how staff are using the technology for more mundane tasks or have clear policies in place regarding the use of AI. Such use could expose labs and other providers to Health Insurance Portability and Accountability Act (HIPAA) compliance risks.

Common compliance risk areas of AI in health care

HIPAA compliance attorney Aleksandra Vold, partner at law firm BakerHostetler, says that the main compliance issues when it comes to AI use in healthcare organizations involve two key areas:

- Lack of staff awareness of approved uses of AI

- Updates to software used by the lab (or other provider) to include AI elements

Risk Area 1: Lack of staff knowledge of acceptable uses of AI

When it comes to the first area, Vold says that, often, staff feel that because they aren’t disclosing protected health information (PHI) to a human or business entity, it’s OK to use free AI products such as ChatGPT in administrative tasks such as generating letters or emails to patients.

Vold stresses that entering PHI into generative AI products for such purposes is not a permitted disclosure if the AI vendor is not a business associate, and lab leaders need to ensure their staff know this. Banning the use of free AI products in general is a good idea, she adds. Labs and other providers also need clear policies on the use of AI in the organization, and they need to ensure that staff go through the compliance department before adding or using new products such as generative AI.

Risk Area 2: Software updates that add AI features

Another risk area Vold says she often sees arises when vendors update software used by labs and other healthcare providers to include AI features. She points out that because the software has already been purchased and a risk assessment has already been done, lab leaders may not always pay close attention to software updates and may be unaware that AI features are being introduced, or how those features are using the lab’s data.

“Compliance issues need to be addressed anytime we’re seeing an update from a vendor,” she says, adding that compliance officers and other lab leaders need to look carefully at the release notes for the update and, if an AI feature is involved, ask the vendor a few key questions, including:

- What does this update mean?

- Have you licensed the AI?

- What are the terms and conditions?

- What is the AI provider allowed to do with the lab’s data?

- Is the lab’s data going to be transferred, and if so, how will it be transferred?

Once the lab has the answers to these key questions, it will have a better idea of whether the AI’s use of its data is HIPAA compliant and, even if it is, whether the lab is comfortable with that usage, Vold says.

She adds that she doesn’t believe vendors are intentionally sneaking AI into their products—in fact, they often excitedly announce such features on social media and other channels—but these updates may not be on compliance teams’ radars. Following key vendors on social media to stay on top of these announcements is another way compliance departments can stay aware of how AI may be creeping into their labs, Vold says.
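As a rough illustration of the release-note review described above, the following Python sketch flags lines in a vendor's release notes that mention AI-related terms so they can be routed to the compliance team. The keyword list and the function name `flag_ai_mentions` are assumptions made for this example, not part of any vendor's tooling, and a keyword match is only a prompt for the vendor questions listed earlier, not a compliance determination.

```python
import re

# Hypothetical keyword list; tune it to the vendors and products the lab uses.
AI_TERMS = [
    "artificial intelligence", "machine learning", "generative",
    "large language model", "llm", "copilot", "chatbot", "ai",
]

# Word-boundary match so "ai" does not fire inside words such as "maintain".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in AI_TERMS) + r")\b",
    re.IGNORECASE,
)


def flag_ai_mentions(release_notes: str) -> list[str]:
    """Return the lines of a release note that mention an AI-related term."""
    return [
        line.strip()
        for line in release_notes.splitlines()
        if _PATTERN.search(line)
    ]


if __name__ == "__main__":
    sample = (
        "v4.2\n"
        "- Fixed label printing bug\n"
        "- New AI-powered result summaries\n"
        "- Maintain audit log retention settings\n"
    )
    for hit in flag_ai_mentions(sample):
        print("Review with vendor:", hit)
```

A screen like this will miss features that are described without any of the listed terms, so it can supplement, but not replace, a careful reading of the release notes.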

General tips to avoid compliance issues with AI

In general, Vold says that it’s best to be overly cautious when it comes to AI use in the lab, as the threshold for what’s considered an unapproved disclosure of PHI (and thus a HIPAA violation) is low. She adds that the U.S. Department of Health and Human Services (HHS) Office for Civil Rights (OCR) and other branches of government are becoming stricter when it comes to what’s considered a HIPAA violation and what labs and other covered entities need to do to ensure compliance.

Essentially all third parties that will be handling PHI from the lab will require a business associate agreement to ensure HIPAA compliance, even in cases where they may have limited access to the lab’s data, Vold says. For example, in its recently released “Guidance on HIPAA & Cloud Computing,” HHS stresses that even cloud services providers (CSPs) that are only handling encrypted data are “not exempt…from business associate status and obligations under the HIPAA Rules,” even if they do not have an encryption key for that data. “The covered entity (or business associate) and the CSP must enter into a HIPAA-compliant business associate agreement (BAA), and the CSP is both contractually liable for meeting the terms of the BAA and directly liable for compliance with the applicable requirements of the HIPAA Rules.”1

Create an AI strategy and guiding principles

Vold says that coming up with guiding principles and an AI strategy is essential for lab and compliance leaders to help manage the risks posed by AI. These principles and strategy should cover what the lab team wants to accomplish with AI and what they want to avoid. Setting up an AI governing council or committee is another step to ensure such principles are followed and that the lab is taking a consistent approach to AI, she adds. Such a committee also helps show that AI “isn’t an afterthought [and] the organization is not averse to the use of AI,” providing staff with someone to go to if they have questions. This group will also act as the lab’s “boots on the ground” to get a better idea of how staff are using AI in the lab and ensure staff are having AI tools vetted by the compliance department before using them.

Evaluating HIPAA compliance risks of AI products

When looking at AI products for the lab, Vold says leaders and compliance officers need to “dig deep on the data use” and carefully look at the terms of the contract. Here are a few key areas Vold suggests lab leaders focus on:

What are the secondary use rights?

These relate to whether the AI provider will use the lab’s PHI to train the AI algorithm. “Sometimes, they can train just your AI model for your particular organization, with your data, which is great, because [labs and other providers] want the benefit of training, but don’t want everyone” to use the data or algorithm, Vold explains.

What are the terms and conditions of the AI provider?

Vold explains that many lab vendors don’t create the AI features they integrate into their products, but instead license other entities’ AI tools. Knowing what terms and conditions the lab vendor has with that AI provider, and whether the lab vendor has signed a business associate agreement with that upstream AI provider, is therefore critical to understanding how the lab’s data may be used.

Vold adds that lab leaders should treat the AI vendor the same as other third parties that are considered business associates under HIPAA, even if there may be pushback and assurances from the vendor that everything is fine. She points out that selling to the healthcare industry is difficult and even vendors with the best intentions may not be up to speed on the terms and conditions they signed as part of their license with the AI provider. The vendor may therefore not even know exactly how their clients’ data will be used.

Evaluating AI vendors

Vold recommends that lab leaders and compliance departments focus on the parts of HIPAA that apply to business associates when evaluating AI vendors, asking questions such as:

- What is your HIPAA compliance program?

- What is your data retention and destruction policy?

- What is your use and disclosures policy?

Vold says that labs should also consider questions the OCR would ask if there were a breach involving the AI vendor:

- Where is your business associate contract?

- Did you do an audit to determine if they are in compliance with their own policies?

- Did you do a risk assessment? Was that considered in your regular enterprise-wide security risk analysis?

“AI is not shorthand for, ‘it’s fine,’” Vold stresses. “AI [used in] healthcare is still a product that’s going to receive PHI… regardless of how shiny and new [AI] is, you need to do the exact same HIPAA compliance review.”
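One way to keep the questions above from being skipped is to track them as a simple checklist per vendor. The sketch below is only an illustration: the class `AIVendorReview`, its field names, and the helper `open_items` are hypothetical names that paraphrase the questions in this section, and an empty list of open items does not by itself establish HIPAA compliance.

```python
from dataclasses import dataclass, fields


@dataclass
class AIVendorReview:
    """One record per AI vendor; every item starts as not yet confirmed."""
    vendor: str
    baa_signed: bool = False                             # business associate contract on file
    hipaa_program_reviewed: bool = False                 # vendor's HIPAA compliance program
    retention_destruction_policy_reviewed: bool = False  # data retention and destruction
    use_disclosure_policy_reviewed: bool = False         # uses and disclosures policy
    vendor_audit_done: bool = False                      # vendor follows its own policies
    in_enterprise_risk_analysis: bool = False            # covered by the security risk analysis


def open_items(review: AIVendorReview) -> list[str]:
    """List the checklist items that are still unconfirmed, in declaration order."""
    return [
        f.name
        for f in fields(review)
        if f.name != "vendor" and not getattr(review, f.name)
    ]


if __name__ == "__main__":
    review = AIVendorReview("ExampleVendor", baa_signed=True, hipaa_program_reviewed=True)
    print(open_items(review))
```

Keeping the items as named fields, rather than free-text notes, makes it easy to report which vendors still have open questions before an AI feature is switched on.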

Trends in AI compliance

A tough stance by HHS OCR

Vold reiterates that there is a low threshold for what is considered a disclosure of PHI, and all 18 identifiers must be removed for PHI to be considered “de-identified” such that it can be shared by a covered entity with a third party without the patient’s permission.2 She adds that the HHS OCR has a hard stance on what information is considered identifiable. For example, HHS OCR recently found that the disclosure of patients’ names along with only an amount owed to a provider was PHI, even though there was no additional identifying or clinical information.3 So, labs need to be especially careful about any patient data shared with an AI vendor, ensuring they are “following the Notice of Privacy Practices and then getting authorization for any additional sharing,” Vold explains.

Vold adds that the HHS OCR has become stricter in recent years regarding HIPAA rules related to protecting data and limiting use, reuse, and sharing. For example, in a recent bulletin related to covered entities’ use of tracking technologies and HIPAA compliance, the OCR took the position that an IP address alone on an unauthenticated web page is PHI.4 Vold explains that though that part of OCR’s guidance was overturned by a recent court ruling, it illustrates the low threshold the HHS OCR has when it comes to what counts as a disclosure of PHI. That stance is unlikely to change soon, Vold says, so labs would do best to be stringent when it comes to AI and how PHI is being used by these products.

The future of HIPAA compliance and AI

However, because there hasn’t yet been a breach involving either a healthcare AI product or the AI features of a healthcare product, neither regulators nor compliance departments yet have a clear roadmap when it comes to AI. Vold says that until such a breach happens, current HIPAA regulations are unlikely to change.

“A lot of compliance departments are saying things like, ‘we don’t even know what AI is being used or how long [data is] being retained’…so they don’t know how much to care,” she says. “I think the same is true with regulators, because the narrative around AI is that ‘it’s transient, we don’t keep [the data], we’re not processing the data, we’re not keeping it in an identifiable way—it’s all encrypted.’” That narrative hasn’t been tested the hard way yet, Vold adds.

First major breach will likely bring more certainty to AI use regulations

Because of the uncertainty around AI and how it’s being used, Vold says regulations and compliance guidance won’t really solidify until that first big breach, which will likely have a significant impact, due to the sheer amount of data involved in each AI product.

“It’s hard to conceptualize without knowing the worst-case scenario,” she says, adding that, once that scenario happens, there will likely be a flurry of compliance activity and more certainty around regulations.

Recent HHS efforts to address AI in healthcare

Though there isn’t much AI-specific HIPAA compliance guidance available, the HHS has taken steps to address AI. The department set up an Office of Chief Artificial Intelligence Officer (OCAIO) in March 2021 to help implement its own AI strategy throughout the department.5 Its Office of the National Coordinator for Health Information Technology also published a final rule related to AI and healthcare IT in January 2024. That rule includes certification requirements for health IT developers as well as “enhancements to support information sharing under the information blocking regulations.” It also addresses the “responsible development and use of artificial intelligence” in health care.6

Some of the AI and HIPAA-related issues discussed in the final rule include:6

- Ensuring that the need to protect patient data and comply with HIPAA doesn’t “stifle innovation or have a chilling effect on beneficial uses of this emerging tool.”

However, the rule is more focused on developers of AI products and doesn’t provide specific guidance on how labs can ensure HIPAA compliance when it comes to using AI. HHS does offer a list of the AI-focused laws, regulations, executive orders, and memoranda that “drive HHS’s AI efforts” on its website, which may give labs and other providers a sense of how HHS will enforce HIPAA compliance involving AI.7 The list includes the document, “Guidance for Regulation of Artificial Intelligence Applications,” released by the White House’s Office of Management and Budget in November 2020, along with HHS’s response to that guidance.8,9 In that response, HHS notes that “this plan represents a moment in time and will continue to evolve as HHS works with the broader industry to ensure that AI is applied in a manner that promotes the health and well-being of all Americans while preserving the public’s trust in how data is collected, stored, and used.”9

The document mentions three key areas related to HIPAA and AI:9

- “The HITECH Act indirectly authorizes HHS to regulate AI applications by establishing requirements for the safeguarding and notification of a breach of protected health information which may occur through use of an AI application by a HIPAA regulated entity”

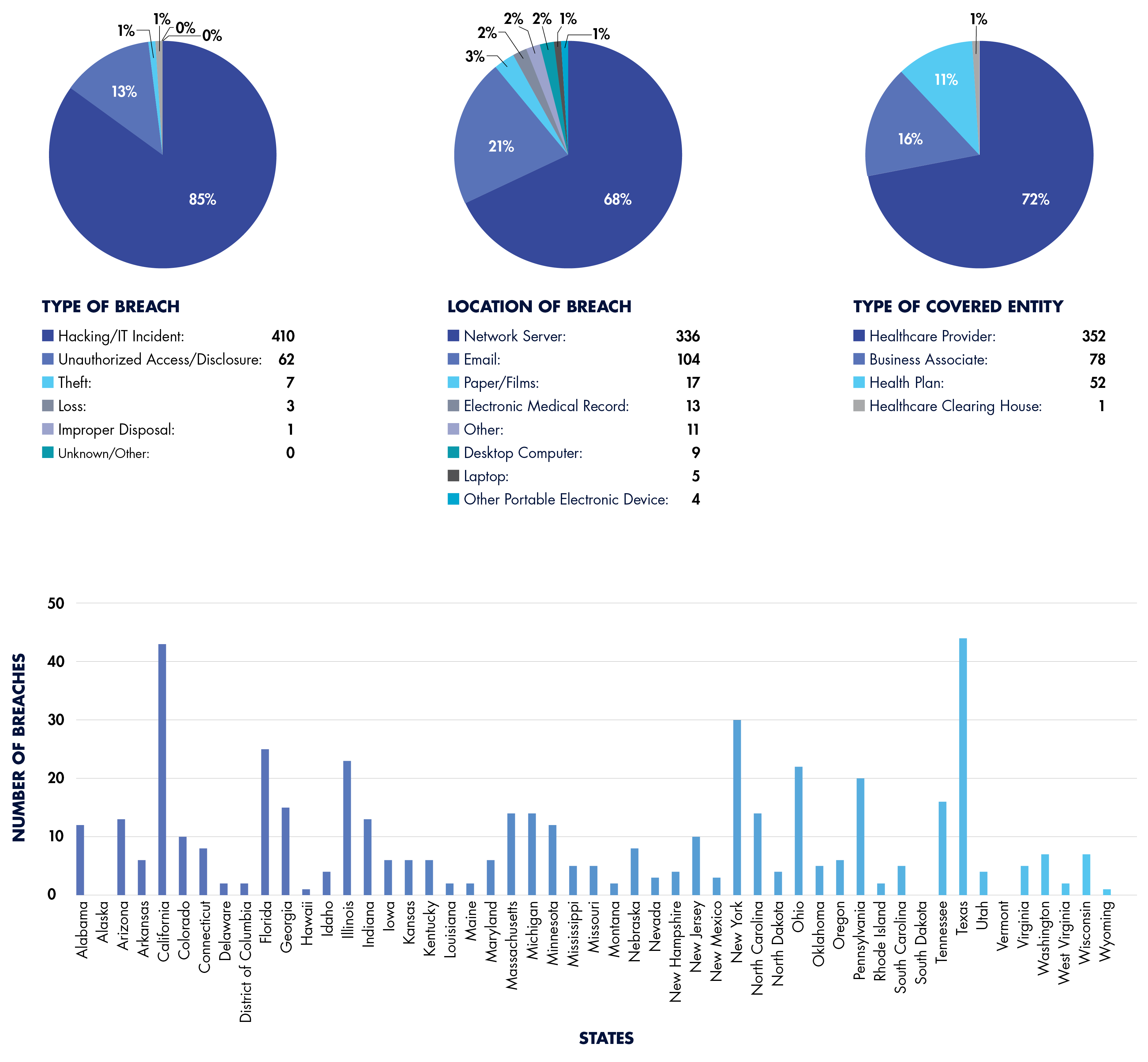

A Breakdown of Reported 2024 HIPAA Breaches (So Far)

Top 10 HIPAA Breaches by Number of Individuals Affected

| Covered Entity | Number of Individuals Affected by Breach |
| --- | --- |
| Change Healthcare | 100,000,000 |
| Kaiser Foundation Health Plan | 13,400,000 |
| HealthEquity | 4,300,000 |
| Concentra Health Services | 3,998,163 |
| Centers for Medicare & Medicaid Services | 3,112,815 |
| Acadian Ambulance Service | 2,896,985 |
| A&A Services d/b/a Sav-Rx | 2,812,336 |
| WebTPA Employer Services (WebTPA) | 2,518,533 |
| INTEGRIS Health | 2,385,646 |
| Medical Management Resource Group | 2,350,236 |

References:

1. https://www.hhs.gov/hipaa/for-professionals/special-topics/health-information-technology/cloud-computing/index.html

2. https://www.g2intelligence.com/are-current-regulations-keeping-labs-from-benefiting-from-ai/

3. https://www.hhs.gov/sites/default/files/signed-ra-sentara-508.pdf

4. https://www.hhs.gov/hipaa/for-professionals/privacy/guidance/hipaa-online-tracking/index.html

5. https://www.hhs.gov/programs/topic-sites/ai/index.html

6. https://www.federalregister.gov/documents/2024/01/09/2023-28857/health-data-technology-and-interoperability-certification-program-updates-algorithm-transparency-and

7. https://www.hhs.gov/programs/topic-sites/ai/statutes/index.html

8. https://www.whitehouse.gov/wp-content/uploads/2020/11/M-21-06.pdf

9. https://www.hhs.gov/sites/default/files/department-of-health-and-human-services-omb-m-21-06.pdf

10. https://ocrportal.hhs.gov/ocr/breach/breach_report.jsf
